In this blog post, Thorsten Jelinek explores the EU and the US approaches to AI governance, focusing on their converging yet distinctly different pathways.

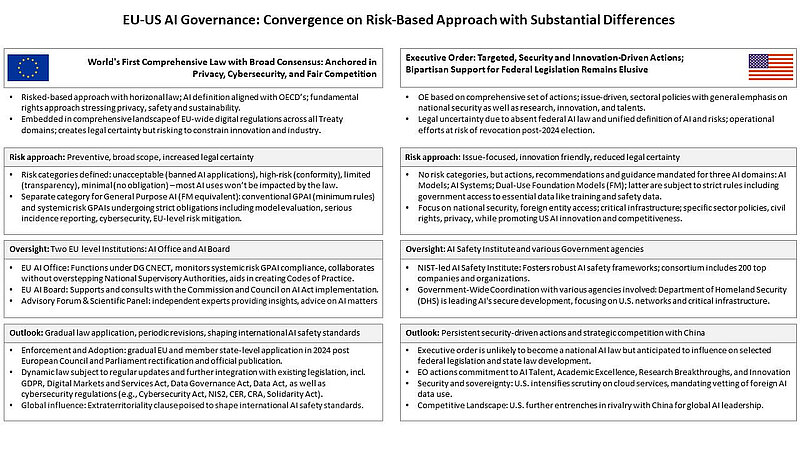

As artificial intelligence (AI) continues to reshape our world, the imperative for robust governance frameworks becomes increasingly clear. The European Union and the United States are both steering towards a risk-based approach, yet their pathways to AI regulation, while converging, remain distinctly different, and bridging the regulatory divide between them is the focus of the discussion that follows. The EU has established a global precedent with its AI Act, whereas the US Presidential Executive Order on AI highlights the recognised need for regulatory guardrails but also underscores the challenge of achieving bipartisan consensus.

EU's AI Act

The EU's AI Act (the “Act”), confirmed by the European Parliament on March 13, 2024, establishes a comprehensive regulatory framework for artificial intelligence, making it the first of its kind globally. It embodies a preventative principle with an extensive scope to increase legal certainty. The Act introduces a multi-tiered risk framework, with stringent rules for high-risk applications and lighter-touch regulations and recommendations for less critical AI uses. Importantly, the Act exempts open-source models unless they are deployed in high-risk contexts. Some companies, as shown in the case of Mistral forging a partnership with Microsoft, might exploit the open-source exemption as a loophole, even though such alliances are typically formed to boost competitiveness. The framework is underpinned by a new oversight mechanism, including two EU-level institutions, the AI Office and the AI Board, responsible for ensuring compliance and facilitating the development of Codes of Practice without directly overstepping the “National Supervisory Authorities” of the member states. At its core, the Act stipulates that the development and deployment of AI must adhere to lawful, ethical, and robust practices, ensuring strict compliance with the EU’s fundamental rights and social values. Already this year, six months after the Act's adoption, AI systems classified as posing an unacceptable risk, including those that manipulate human behaviour or enable social credit scoring, will be prohibited.

While the Act is a landmark achievement, it remains controversial. Critics, such as DigitalEurope (a lobby group representing 45,000 digital businesses), have argued that it could stifle innovation and competitiveness. Similarly, shortly before the law's adoption, Italy, Germany, and France expressed concerns, particularly through their joint non-paper “An innovation-friendly approach based on European values for the AI Act,” voicing resistance to stringent regulation of foundation models and pushing for “mandatory self-regulation.” On the other hand, proponents assert that strong guardrails actually spur innovation by creating clear frameworks within which developers can operate safely and creatively. Regardless, most applications are expected to fall into the lowest risk category and will not face any mandatory obligations. However, the Act’s extraterritoriality clause, under which the Act governs both AI systems operating in the EU and foreign systems whose output enters the EU market, is likely to cause friction, especially with the US, where it is perceived as protectionist. This is the flipside of the EU's push towards digital sovereignty, encapsulated by comprehensive regulations on privacy, cybersecurity, and digital markets.

The EU's AI strategy aims both to achieve internal coherence among its member states and to set a global benchmark in AI governance. Its thorough approach introduces the Act in stages over a 36-month period post-enactment, with periodic updates to ensure a smooth transition to compliance for member states, AI providers, and users. Beyond bolstering AI safety within the EU, the strategy also seeks to elevate global AI safety standards by leveraging the Act's extraterritorial clause. Because this clause extends the Act's reach to AI systems that operate outside the EU but affect the EU market, it underscores the EU's influence on worldwide AI practices.

US's Presidential Executive Order

In contrast, the United States has taken a different approach. Rather than enacting a comprehensive, nationwide law, the US government has promulgated a Presidential Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (“Executive Order”), encompassing a broad array of guidelines, recommendations, and specific actions. This strategy aligns with the US precedent of lacking federal laws in other pivotal areas of digital governance. Unlike the European Union's General Data Protection Regulation (GDPR), the US does not possess a unified federal data privacy law, relying instead on a mosaic of federal and state laws and sector-specific rules. Similarly, there is no direct federal cybersecurity legislation analogous to the EU's Network and Information Systems (NIS2) Directive; cybersecurity in the US is managed through sector-specific regulations, executive orders, and various initiatives. Despite growing recognition of the need for a risk-based approach, bipartisan support for comprehensive federal legislation in these areas remains elusive.

While the absence of federal legislation introduces legal uncertainty, it also allows flexibility and a nuanced approach to AI safety and security, notably for high-risk applications such as Dual-Use Foundation Models. The Executive Order is not only a set of targeted restrictions with sectoral policies, such as in transportation and healthcare, but also a means of fostering AI talent, education, research, and innovation, and thus of enhancing US competitiveness. In contrast, the EU AI Act's focus does not extend to competitive factors, reflecting the EU's broader regulatory stance on digitalisation that prioritises ethical and safety considerations. Even so, security remains a critical concern for the US, as evidenced by proposed mandates requiring US cloud companies to vet and potentially limit foreign access to AI training data centres. It is also evident in provisions ensuring government access to AI training and safety data. This strategy underscores a deliberate effort to protect US interests amidst the dynamic AI domain and intense competition with China for global AI dominance. It also reflects a strategic response to the extraterritorial reach of the EU AI Act, which is perceived as protectionist because it compels major US AI firms like Microsoft, OpenAI, Google, DeepMind, and Anthropic to align with EU regulations.

Because there is no single law, US oversight also differs. The Executive Order underscores AI’s significance, especially for maintaining a competitive edge while ensuring national security. It seeks to coordinate multiple agencies by establishing a comprehensive government-wide strategy, with the Department of Homeland Security (DHS) at the forefront of ensuring the secure development and deployment of AI, particularly within US networks and critical infrastructure. The establishment of the AI Safety Institute, directed by the National Institute of Standards and Technology (NIST) and backed by a consortium of 200 leading organisations, including prominent US AI firms, signifies a crucial move towards developing a robust AI safety framework. This consortium aims to advise on safety standards and to ensure that industry interests are represented.

It is crucial to recognise that the Executive Order does not exist in isolation but builds upon earlier foundational efforts, notably the proposed AI Bill of Rights and the NIST AI Risk Management Framework (RMF). These prior initiatives provide a structured basis for the Executive Order's directives. In addition, the Office of Management and Budget (OMB) AI Guidance further operationalises these principles by requiring federal agencies to develop specific strategies for AI deployment, conduct impact assessments for AI systems, and appoint Chief AI Officers. However, those initiatives are non-binding. The US approach has another major drawback: the Executive Order has yet to mesh consistently with other federal regulations. Its effectiveness also depends on the political will of the next administration, which can revoke it. The proposed National AI Commission Act could be a starting point to bolster the Executive Order, setting the stage for enduring, enforceable AI governance. In contrast, the EU's extensive regulatory framework, which includes data privacy, cybersecurity, and market fairness provisions, provides a robust foundation that enhances the legally binding AI Act.

Conclusion

The contrasting regulatory landscapes of the EU and the US underscore the vital need for interoperability in AI governance, a framework proposed in the “Interim Report: Governing AI for Humanity” by the UN Secretary-General's AI Advisory Body. A harmonised approach is crucial for maintaining the delicate balance between ensuring AI safety and fostering innovation and competitiveness, and between protecting digital sovereignty and promoting market and technology openness. Such interoperability is key to achieving transatlantic consistency in AI development and deployment, paving the way for global standards that benefit all. To foster global AI governance, the EU and the US must also ensure their regulatory approaches are not protectionist, seeking interoperability and openness with major countries and regions, and especially with the Global South, to enhance worldwide collaboration in AI development and deployment.

Teaser photo by Steve Johnson on Unsplash